User Contributed - Making big $$$ with Links

Here is a contributed post by Tobsn. He’s the dude who made the Recommend-It plugin for me a while back.

Making big $$$ with Links

Over the last few months I’ve been testing some of the big link-selling sites. I wanted to know how much they pay you (in this case, me) and how easy it is to get your links sold out.

First of all, I’ll keep it short. I don’t like those freakin’ big postings like Eli does; I like information compressed into little pieces. So let’s start.

First, I want to redirect German visitors to teliad.de. It’s big and it works well. The prices are not as high as on TextLinkAds, but it works fine for German sites. And just btw, I don’t like linklift. Don’t ask me why, I just don’t like them. Rest of Europe: sorry, I haven’t tested anything else. Any hints? Write them in the comments!

My Test Sites

I tested this with two very simple sites. One is a poker blog with very good bought content, like a glossary of terms (“full house” etc.), descriptions of the most common types of poker and similar card games, and of course some poker news ripped 1:1 from poker news sites. The site has around 50 unique pages. PR4.

The other site I want to sell out is a German debt consulting page. You enter your ZIP code or city in the search field and get back debt consulting offices near that point, sorted by distance. A nice geo search site, well indexed by Google, organized by state and city. It also has some unique content pages, like a glossary. We’re talking about something like 50,000 pages on that site. PR5.

Both sites are sprinkled with Adsense and affiliate programs.

Let’s Get to the Big Players: LinkWorth vs. TextLinkAds

First, before we get to the highlight, TLA, let’s talk for a minute about LinkWorth.

The site looks nice, the features keep getting better and the concept probably works. In the end, just not for my sites. I’ve had them in LinkWorth for about four months and nothing. Not one sale. Not one single crappy 10-cent link.

Nothing. I played with the options and did everything I could, but nobody wants a backlink on my sites. Maybe it just happens to me and others are making billions with 10 sites. I don’t know, and at this point I don’t care, because it didn’t work out for me.

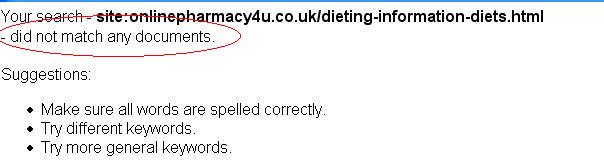

Just while I was writing this, someone wanted to place a link on my poker site: one link for $40. This probably saves LinkWorth from a bad rating. So I visited the “pending” links page to approve the link. On the next page, after I clicked approve next to the link, LinkWorth asked me to choose how I would build in the links. I selected the WordPress plugin because it’s a WordPress site, and with a plugin I don’t have much to take care of.

The Maintenance

After I selected the WordPress plugin and got the download link, the next page told me:

“www.somepokerdomain.pl Failed - Please trying again in 24 hrs” - and the link I had to approve was gone.

No love for LinkWorth. That’s all I have to say.

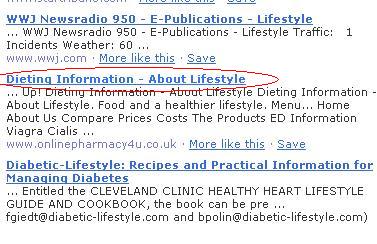

Now let’s talk about TextLinkAds, where you make the big money with crap.

TextLinkAds, yeah, I like the sound of that. They send me so much money each month it makes Larry and Brin look like hobos compared to my richness. Seriously, they pay out very well.

In detail, that means: during the first month nothing happened at all, but then from one day to the next I sold out all 8 links and one RSS link on my poker blog in about a week, and all 6 links on the debt consulting page in around 2 weeks. 8 and 6 links don’t sound like big money, I thought, but I wasn’t aware of how much a backlink on a good, niche-matching, unique-content site is worth.

Let’s Put It Into Numbers

poker - 9 links - $77

debt - 6 links - $72

That means one link on my debt page gets me $12 and one link on the poker site around $8.5.
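A quick sketch of that per-link math in Python, using only the two totals above. The helper name `revenue_per_link` is just made up for illustration; it simply divides the monthly total by the number of sold links (the poker figure comes out at about $8.56, which I round to $8.5 in this post).

```python
def revenue_per_link(total_usd: float, links: int) -> float:
    """Hypothetical helper: average payout per sold link."""
    if links <= 0:
        raise ValueError("links must be positive")
    return total_usd / links

# Totals from my two test sites: (total payout in USD, number of links sold)
sites = {"poker": (77, 9), "debt": (72, 6)}

for name, (total, links) in sites.items():
    # Format to two decimals, e.g. "poker: $8.56 per link"
    print(f"{name}: ${revenue_per_link(total, links):.2f} per link")
```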

I’m impressed. I’ve sold out all my links and get nice money for doing nothing. Literally nothing. The best conversion, I think, is the poker blog.

… and now comes the bluehat kung foo …

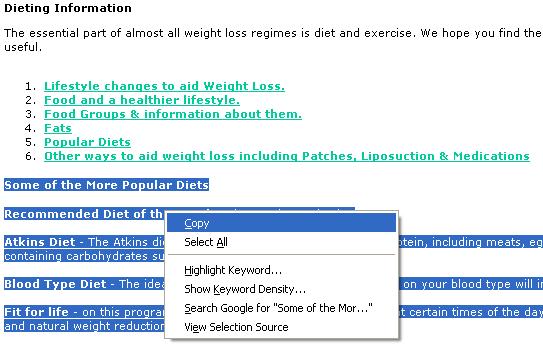

I just set up a WordPress blog on a nice *poker*.com domain and trackback spammed it: Google shows 83 visible backlinks, MSN 84, and Yahoo’s Site Explorer 528. I didn’t put any effort into it. I just scraped myself a small list of trackback URLs and hit the ping button. It took me around 30 minutes.

Now Comes The Interesting Part

A) I found out that many, many blogs block trackbacks with *poker* in the title and/or URL, which meant I had to use other similar words like “card games” or “casino”. So I’m not really “ranked” well on Google for the major keywords. Sure, I got myself a PR4 domain in about two weeks (a PR update came shortly after. HA!), but I only have good positions for loooong terms. That ends up in not much search engine traffic.

B) You don’t need niche-relevant backlinks. They probably get you a slightly higher PR in the end, but for now all that counts is that you get many backlinks, no matter which keyword you link with, because you don’t need the search engine traffic.

C) Sites with a PR higher than 3 in a niche that rules the SERPs sell very well at TLA. $8.5 for a crappy backlink on a page that doesn’t really make traffic and has a lousy PR is, I think, awesome.

That means if you can get a good PR for a domain themed around a big subject like poker, mortgages, loans, debts or whatever else is overrun on every search engine by SEO-optimized, backlink-buying domains, you can make nice money very fast.

Let’s Take A Calculator

Say we have 200 domains on the topic of poker. On each one we put up WordPress, fill it with RSS news and some bought content, and build in the TextLinkAds WordPress plugin for the sidebar links and the RSS feed links. Now we get a PR4 for every domain. Somehow. Magically.

With just 6 links in the sidebar, based on my $8.5 per link, we would earn 200 × 6 × $8.5 = $10,200.
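Here is the same calculation as a tiny Python sketch. It assumes (big assumption!) that every one of the 200 domains reaches PR4 and sells all 6 sidebar links at my poker average of $8.5; the function name `projected_earnings` is just hypothetical.

```python
def projected_earnings(domains: int, links_per_domain: int, usd_per_link: float) -> float:
    """Hypothetical helper: assumes every domain sells out every link at the same price."""
    return domains * links_per_domain * usd_per_link

# 200 domains x 6 sidebar links x $8.5 per link
total = projected_earnings(200, 6, 8.5)
print(f"${total:,.0f}")  # prints "$10,200"
```

Change any of the three inputs to see how fast the number drops if fewer domains hit PR4 or links sell for less.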

Nice, for just setting up stupid domains, without caring about your own backlinks as long as they get your site a high PR…

Just btw: if you need 30 minutes per weblog and work on it 8 hours per day, you’ll need 12.5 days to set up 200 blogs. Say you take a bit longer, a month. After that month you could earn a few thousand dollars… just by keeping the PR of your weblogs stable and as high as possible.
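And the setup time as a quick Python sketch, assuming the same 30 minutes per blog and 8-hour workdays as above (the helper `setup_days` is made up for illustration):

```python
def setup_days(blogs: int, minutes_per_blog: float, hours_per_day: float) -> float:
    """Hypothetical helper: working days needed to set up all blogs."""
    total_hours = blogs * minutes_per_blog / 60  # 200 * 30 min = 100 hours
    return total_hours / hours_per_day           # 100 h / 8 h per day

print(setup_days(200, 30, 8))  # prints 12.5
```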

… and you know what? TLA keeps it real:

My Referral Link For Text Link Ads If You’re Interested In Signing Up Under Me.

(by Tobsn)