My name is Max, and I'm one of the guys over at WhatRunsWhere.com. We offer the premier online media buying intelligence tool. Below, I'm going to try to teach you how to start media buying on display inventory (banners) with a small budget and little risk.

Media buying is one of the hardest marketing channels to break into. To buy media through large sites or networks, you usually have to shell out quite a bit of cash (typically $5-10k just to get your foot in the door). I'm going to show anyone who wants to get into this highly lucrative space (mo money!) how to test with a small budget (low risk) and find out what's working. This is how I personally got started media buying and how I scaled my first media buying campaign (it blew up and made me a fair amount of cash). I'll cover two low-risk traffic sources that can get you in the door and profitable on media buys fast: adbuyer.com first and Sitescout.com second. Fair warning, this is a bit long, but there's a small bonus for those of you who make it all the way through.

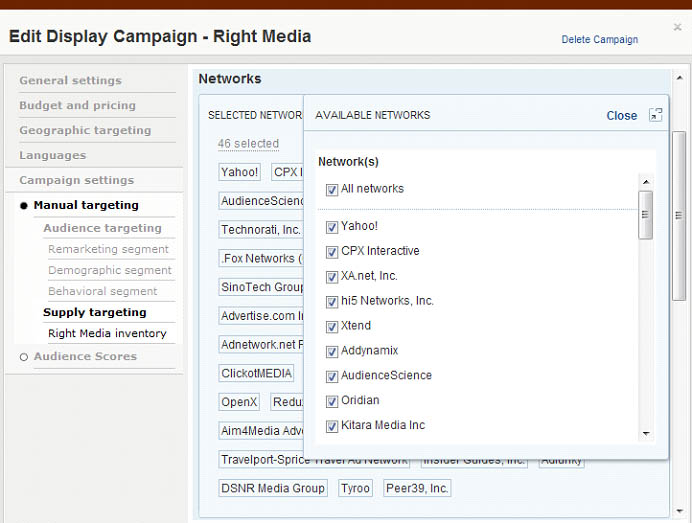

The trick most people don't know is that exchanges and resellers exist. My favourite site to start testing on is adbuyer.com. I love adbuyer because it sells remnant inventory from the ad networks on Right Media (remnant inventory is inventory a network has left over or doesn't sell, so you get it at a discounted rate). Right Media is Yahoo's ad exchange platform, so adbuyer has inventory from every network that uses that exchange, and it lets you target specific networks. You do this by selecting manual targeting instead of their audience score (see the screenshot).

Doing this lets you see which networks are converting for you and which show promise. Run a small test on every network and see which ones produce conversions on your limited budget. This lets you weed out the networks that have no shot of working and saves you a LOT of money and time you'd otherwise spend testing networks one by one. You can also do this on a starting budget of around $500 instead of, say, $5,000 per network.
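To get a feel for how far a ~$500 test goes, here's a minimal Python sketch that splits a test budget evenly across the networks you want to try and estimates how many impressions each slice buys. The flat CPM is an assumption for illustration; real remnant CPMs vary by network, and adbuyer.com's own interface is not modeled here.

```python
def split_test_budget(total_budget: float, networks: list[str], cpm: float) -> dict[str, dict[str, float]]:
    """Split a test budget evenly across networks.

    cpm is an assumed flat price per 1,000 impressions, so each
    network's slice buys (budget / cpm) * 1000 impressions.
    """
    if not networks:
        raise ValueError("need at least one network to test")
    if cpm <= 0:
        raise ValueError("cpm must be positive")
    per_network = total_budget / len(networks)
    return {
        name: {"budget": per_network, "impressions": per_network / cpm * 1000}
        for name in networks
    }
```

For example, $500 spread over five networks at an assumed $2 CPM gives each network $100, or about 50,000 impressions, which you can then compare against the conversions each network returns.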

You can also limit your risk here with whatrunswhere.com: look up some of these networks, check what's already running on them, and pick a set of networks that already carry working products in your niche.

A big part of media buying is your creative and your landing page. Like with any other traffic source, your landing page needs to be optimized, but your creative is key: a good creative can make or break a campaign. That's because of how media buys are priced. Most are run on a CPM (cost per thousand impressions) basis, so the higher your banner's click-through rate (CTR), the lower your effective cost per click (CPC), and the less you pay for interested eyeballs. Finding the creatives that give you the best CTR while keeping your CPA (cost per acquisition) low is a major factor in a successful media buy (later on I'll put in a product plug and tell you how you can use whatrunswhere.com to do this).
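The CPM-to-CPC relationship is simple arithmetic: on a CPM buy, effective CPC = CPM / (1000 × CTR). This Python sketch (the numbers in the note below are hypothetical) shows why doubling CTR halves what you pay per click and per conversion.

```python
def effective_cpc(cpm: float, ctr: float) -> float:
    """Cost per click on a CPM buy.

    cpm: price per 1,000 impressions.
    ctr: click-through rate as a fraction (0.002 means 0.2%),
    so clicks per 1,000 impressions = 1000 * ctr.
    """
    if ctr <= 0:
        raise ValueError("ctr must be positive")
    return cpm / (1000 * ctr)


def effective_cpa(cpm: float, ctr: float, conversion_rate: float) -> float:
    """Cost per acquisition: cost per click divided by the share of clicks that convert."""
    if conversion_rate <= 0:
        raise ValueError("conversion_rate must be positive")
    return effective_cpc(cpm, ctr) / conversion_rate
```

At a $2 CPM, a banner with a 0.2% CTR costs about $1.00 per click, while one with a 0.4% CTR costs about $0.50. With a 2% landing-page conversion rate, that's roughly $50 vs. $25 per acquisition on the same inventory.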

OK, a mini break from adbuyer.com: let's go through how to research a creative set and get it ready to test. I go into whatrunswhere.com and search my niche keywords in our banner ad search. Tons of banners in your niche that are currently running and making money will pop up. From there, I try to spot similarities: what do the most popular banners have in common? Looking up banners on whatrunswhere.com also shows you how long each banner has been running. Banners that have been seen a lot over time are probably working, because a direct response marketer wouldn't keep running something that wasn't making money.
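If you note when you first and last saw each banner, ranking them by run length is straightforward. This is a minimal Python sketch; the record layout (`name`, `first_seen`, `last_seen`), the 30-day cutoff and the sample banners are hypothetical, not whatrunswhere.com's actual export format.

```python
from datetime import date


def longest_running(banners: list[dict], min_days: int = 30) -> list[tuple[str, int]]:
    """Return (name, days_observed) for banners seen for at least min_days, longest first.

    Each banner is a dict with hypothetical keys "name", "first_seen" and
    "last_seen" (datetime.date values). Run length is only a proxy for
    profitability: marketers tend to pull banners that lose money.
    """
    runs = [(b["name"], (b["last_seen"] - b["first_seen"]).days) for b in banners]
    kept = [run for run in runs if run[1] >= min_days]
    return sorted(kept, key=lambda run: run[1], reverse=True)


# Hypothetical observations, for illustration only.
observed = [
    {"name": "quiz-style", "first_seen": date(2011, 1, 1), "last_seen": date(2011, 4, 1)},
    {"name": "before-after", "first_seen": date(2011, 3, 1), "last_seen": date(2011, 3, 10)},
    {"name": "countdown", "first_seen": date(2011, 2, 1), "last_seen": date(2011, 3, 15)},
]
ranked = longest_running(observed)
```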

Using this as inspiration, I build my own set of creatives to start testing with. I like to start broad, with very different creatives, to see what does best. You'd honestly be surprised how often far-out creative ideas pull in huge CTRs and conversions. It's about being eye-catching while also communicating your message properly to your audience.

I like to build a creative set of about 10 varied banners in different ad sizes. Personally, 300×250 ads have always done best for me, but other IAB standard sizes like 160×600 also do well on specific sites, since they engage visitors in different ways.
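Once the set is live, picking a winner per ad size comes down to comparing CTRs. Here's a minimal Python sketch; the tuple layout, the sample numbers and the 5,000-impression minimum are all hypothetical, and the minimum is there because a CTR measured on a handful of impressions is mostly noise.

```python
def best_by_size(
    results: list[tuple[str, str, int, int]], min_impressions: int = 5000
) -> dict[str, tuple[str, float]]:
    """Pick the highest-CTR creative for each ad size.

    results: (creative, size, impressions, clicks) tuples.
    Creatives below min_impressions are skipped as too noisy to judge.
    Returns {size: (creative, ctr)}.
    """
    best: dict[str, tuple[str, float]] = {}
    for creative, size, impressions, clicks in results:
        if impressions < min_impressions:
            continue
        ctr = clicks / impressions
        if size not in best or ctr > best[size][1]:
            best[size] = (creative, ctr)
    return best


# Hypothetical test results.
test_results = [
    ("curiosity", "300x250", 20000, 60),
    ("price", "300x250", 20000, 30),
    ("curiosity", "160x600", 10000, 12),
    ("price", "160x600", 3000, 9),  # higher CTR, but too few impressions to trust yet
]
winners = best_by_size(test_results)
```

In this made-up data, the "price" 160×600 banner has the best raw CTR (0.3%) but is skipped until it has enough impressions behind it.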

I like using whatrunswhere.com here because it saves me time and money. By borrowing concepts and ideas from what others are already doing, I take out a lot of the risk of having to test EVERYTHING and can focus on testing which major elements of what's already working also work for my specific campaign.

Once you've identified the networks you think may have promise, contact them directly. You know their remnant inventory is OK, or at least converts for your page/offer. You most likely won't be profitable right off the bat (most media buying campaigns don't make money from day 1; they need to be optimized), but it's a good early indicator of what will work. If you are profitable off the bat, jump on those networks ASAP and start raking in some cash. Example: if you test on adbuyer and see that network A is bringing in conversions, but at a CPA above your target, while no other network is bringing in any conversions, network A is a good place to start buying directly and optimizing toward profitability.
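The decision in that example can be written as a small rule. This Python sketch assumes you've recorded spend and conversions per network from the adbuyer.com test; the action labels and the target CPA are hypothetical, and a network with zero conversions on a tiny test isn't proven dead, just a lower priority.

```python
def triage_networks(stats: dict[str, tuple[float, int]], target_cpa: float) -> dict[str, str]:
    """Sort tested networks into next steps.

    stats maps network name -> (spend, conversions) from the test buy.
    """
    actions = {}
    for network, (spend, conversions) in stats.items():
        if conversions == 0:
            actions[network] = "deprioritize"  # no signal yet on this budget
        elif spend / conversions <= target_cpa:
            actions[network] = "go direct and scale"  # already profitable
        else:
            actions[network] = "go direct and optimize"  # converting, but above target CPA
    return actions
```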

When you go direct to the network, there are a few things we need to hammer out with them to give us the best leverage:

Out clause (how much notice you need to give them to pause the campaign if you want to stop it): try to get a 24-hour out clause, or a 48-hour one at the latest.

Frequency cap (how many times your ad will show to a single user per day): I personally like to cap at 2/24 or 3/24 (two or three impressions per user per 24 hours), but you should experiment with it yourself.

Demographic targeting: if you know your offer's demographics, the network can try to exclude irrelevant inventory (this will save you money).

CPA: make sure to place conversion pixels and track CPA. This isn't like PPC or social; the network needs this data to be able to help you optimize placements.
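To make a cap like 3/24 concrete, here's a minimal Python sketch of one way to enforce it: a rolling 24-hour window per user. This is only an illustration of what the number means; the network enforces caps on its own systems, and some count per calendar day rather than per rolling window.

```python
from collections import defaultdict, deque


class FrequencyCap:
    """Allow at most max_impressions per user within a rolling window (3/24 = 3 per 24 hours)."""

    def __init__(self, max_impressions: int, window_hours: float = 24):
        self.max_impressions = max_impressions
        self.window_seconds = window_hours * 3600
        # user_id -> timestamps (in seconds) of impressions already served
        self._seen: defaultdict[str, deque] = defaultdict(deque)

    def should_serve(self, user_id: str, now: float) -> bool:
        """Return True (and record the impression) if this user is still under the cap."""
        history = self._seen[user_id]
        # Drop impressions that have aged out of the window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_impressions:
            return False
        history.append(now)
        return True
```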

Network buys are something of a black box: the network does most of the placement optimization (where your ad shows up), but you can always make recommendations based on the referrers (where the traffic comes from) that you see in your own tracking.
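Here's a minimal Python sketch of turning your own tracking data into per-domain click and conversion counts you can bring to the network. The `(referrer_url, converted)` record shape is a hypothetical export from your tracker. Note that on display buys the referrer is sometimes missing or points at an ad-serving domain rather than the publisher.

```python
from collections import Counter
from urllib.parse import urlparse


def placement_report(events: list[tuple[str, bool]]) -> dict[str, tuple[int, int]]:
    """Count clicks and conversions per referring domain.

    events: (referrer_url, converted) pairs, one per tracked click.
    Returns {domain: (clicks, conversions)}.
    """
    clicks: Counter = Counter()
    conversions: Counter = Counter()
    for referrer, converted in events:
        # Normalize "www.example.com" and "example.com" to the same key.
        domain = urlparse(referrer).netloc.lower().removeprefix("www.") or "(no referrer)"
        clicks[domain] += 1
        if converted:
            conversions[domain] += 1
    return {domain: (clicks[domain], conversions[domain]) for domain in clicks}
```

Domains with plenty of clicks and no conversions are candidates to ask the network to exclude; domains that convert are the ones to ask for more of.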

OK, on to Sitescout.com. Sitescout is an RTB (real-time bidding) platform. In practice, they take a low margin and broker you site-specific buys on inventory from Rubicon Project. This differs from adbuyer.com because instead of buying from a "black box" media network, you can see exactly which sites you're buying on and exactly where on those sites your ads appear (but expect to pay higher CPMs on a lot of sites for this privilege). It's like doing major media buys on large sites, but without the huge insertion orders (IOs) or prepays. You can literally get started here with just $500.
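Because you can see individual placements on an RTB platform, you can work out the most you can afford to pay per placement. A minimal Python sketch, assuming you know (or have estimated from test data) a placement's CTR and your landing page's conversion rate; Sitescout's actual bidding interface isn't modeled here.

```python
def max_cpm_bid(target_cpa: float, ctr: float, conversion_rate: float) -> float:
    """Break-even CPM for a placement.

    Conversions per 1,000 impressions = 1000 * ctr * conversion_rate,
    and each conversion is worth at most target_cpa to you.
    """
    conversions_per_thousand = 1000 * ctr * conversion_rate
    return target_cpa * conversions_per_thousand
```

With a $40 target CPA, a 0.3% CTR and a 2% conversion rate, the break-even CPM is $2.40. A placement that consistently costs more than that can't be profitable unless its CTR or your conversion rate improves.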

I like this as well because this is where I can REALLY use whatrunswhere.com to get a huge competitive edge. Every site has a visitor demographic (the type of people who visit it). By using whatrunswhere to search what other people in my niche are doing, I can view the exact placements (websites) their ads are showing up on. If those sites are in Sitescout's inventory, I can test my campaign on them too to see whether those placements work, but more importantly, I get an idea of the sites' demographics. Using Quantcast.com, Compete.com and Alexa.com, I can build a pretty solid picture of the demographics of any one target website. If I notice that a lot of websites with similar demographics are being targeted, that tells me which audience is likely converting in my niche.

I take that information over to Sitescout and look through the various websites they offer, matching my offer's key audiences to other sites within Sitescout that have the same demographics to maximize my chances of finding sites that work.
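One way to turn "same demographics" into a number is to describe each site as a vector of audience shares (for example, the share of visitors in each age bracket) and rank candidate sites by cosine similarity to a site your competitors run on. This is a swapped-in technique for illustration, not something Sitescout or the traffic-estimate sites provide, and the profiles below are made up.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means an identical audience mix; lower values mean less alike."""
    if len(a) != len(b):
        raise ValueError("profiles must use the same brackets")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_by_audience_match(
    target: list[float], candidates: dict[str, list[float]]
) -> list[tuple[str, float]]:
    """Rank candidate sites by how closely their audience profile matches target."""
    scores = {site: cosine_similarity(target, profile) for site, profile in candidates.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical audience shares for age brackets 18-24, 25-34, 35-49, 50+.
competitor_site = [0.10, 0.30, 0.40, 0.20]
ranking = rank_by_audience_match(
    competitor_site,
    {"site_a": [0.12, 0.28, 0.38, 0.22], "site_b": [0.40, 0.30, 0.20, 0.10]},
)
```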

Not every website in a direct buy will work, and some will burn out. The key is to find a few good placements that make you money and milk them for all they're worth.

Once you've found what's profitable on Sitescout and you're ready to go to the next level, you can go direct and try to place buys on those websites yourself. This isn't cheap and should only be done if you're already profitable. Sitescout takes very thin margins as it is, so going direct will only make you marginally more, and you'll have to worry about rotating in fresh creatives to prevent creative burnout (banner blindness) with that website's audience. Luckily, as I mentioned before, whatrunswhere.com is a kickass tool for finding new, already-working creatives to test, which keeps your campaigns fresh and noticeable to visitors.
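A simple way to catch burnout before it eats your margin is to compare a creative's recent CTR with its best stretch so far. This Python sketch uses a 3-day rolling average and a 30% drop threshold; both numbers are hypothetical starting points, not rules.

```python
def is_burning_out(daily_ctrs: list[float], window: int = 3, drop_threshold: float = 0.3) -> bool:
    """Flag a creative whose recent CTR has fallen well below its best stretch.

    daily_ctrs: CTR per day, oldest first.
    Returns True when the latest `window`-day average is more than
    drop_threshold (30% by default) below the best `window`-day average.
    """
    if len(daily_ctrs) < 2 * window:
        return False  # not enough history to judge
    averages = [
        sum(daily_ctrs[i:i + window]) / window
        for i in range(len(daily_ctrs) - window + 1)
    ]
    peak, recent = max(averages), averages[-1]
    return recent < peak * (1 - drop_threshold)
```

When it returns True, that's the cue to rotate in a new creative for that placement.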

Negotiating a direct buy is much like negotiating a network buy (you have to consider all the same factors), but your out clause will usually be a bit longer and they'll charge much higher CPMs (that's the price you pay for knowing EXACTLY what you're getting). So make sure it's worth it; don't just go in there blind and swinging. I did exactly that when I was starting out and lost quite a bit of money before I finally wised up, did what I described above, and have been able to media buy profitably ever since (when I started, Sitescout wasn't around; boy, I wish it had been).

Get out there and start testing. The truth is, most people will read this post and do nothing. The few who take action and actually test and play around will learn from that testing and start making money from media buys. That's great for them, because this fear of testing creates a high barrier to entry, which makes it harder for new marketers coming into the space to rip off your stuff. The best thing to do is just get your feet wet and start testing.

BONUS: for all of you who read this far down the post, here's a way to test banners on a CPC basis instead of CPM: the Google content network. You can upload banners there, but you'll pay a premium, because to get shown frequently your single banner has to either get a higher CTR than the whole block of text ads it replaces or raise Google's eCPM (how much Google earns per thousand impressions on that site) above what it would normally get. But using their placement-specific tools or keyword-related sites, you can test your creatives across a large range of related websites, optimize CPMs and so on, all on a budget. Again, whatrunswhere.com is awesome here, as it shows you the EXACT placements on the content network where your competitors are buying, so you can test them out too instead of wasting money on placements you're not sure are working. It's a decent way to test banner ads if your budget is so limited that you can't afford to test on Sitescout or adbuyer. That said, adbuyer and Sitescout are a lot more affiliate-friendly and will let many more types of offers and riskier creatives through than Google will, so it's a give and take.
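The eCPM math behind that premium is worth seeing. For a CPC ad, eCPM = CPC × CTR × 1000, so a banner has to beat the combined eCPM of the text ads it would displace. This Python sketch treats the auction as a pure eCPM comparison, which is a simplification (Google's real auction also weighs quality factors), and the numbers are hypothetical.

```python
def ecpm_from_cpc(cpc: float, ctr: float) -> float:
    """Revenue per 1,000 impressions for an ad that pays per click."""
    return cpc * ctr * 1000


def min_cpc_to_win(competing_ecpm: float, banner_ctr: float) -> float:
    """Lowest CPC bid at which the banner's eCPM matches competing_ecpm."""
    if banner_ctr <= 0:
        raise ValueError("banner_ctr must be positive")
    return competing_ecpm / (banner_ctr * 1000)


# Hypothetical text block: four ads, each bidding $0.50 CPC at a 0.2% CTR.
text_block_ecpm = sum(ecpm_from_cpc(0.50, 0.002) for _ in range(4))
required_bid = min_cpc_to_win(text_block_ecpm, banner_ctr=0.004)
```

In this made-up case, even a banner with twice the per-ad CTR would need to bid about $1.00 per click, double the text ads' $0.50, which is the premium described above.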

As with all IM-tool-related posts, here is your discount, with no affiliate link:

Special Order: https://www.whatrunswhere.com/signup/index2.php?pkg=2

Coupon Code: bhseo

$45 off your first month

Thanks Max for the awesome guest post!
